In the previous tutorial, intro to image processing with CUDA, we examined how easy it is to port simple image processing functions over to CUDA. In this tutorial, we'll go over a substantially more complex algorithm and show how to port it to CUDA with incredible ease.

The algorithm

The image processing algorithm we'll be going over takes an image and makes it appear as though it had been painted. This algorithm was covered in detail in the oil painting algorithm article. In a nutshell, for each pixel A, we calculate the intensity of every surrounding pixel within a square radius, R. Each pixel's intensity determines which bin it falls into, and its red, green and blue components are added to that bin. The final color of pixel A is simply the average color of the bin containing the most pixels. The number of bins (intensity levels) and the radius are both input parameters. If this explanation isn't clear enough, take a quick look at the oil painting algorithm article.
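To make the description above concrete, here is a minimal sketch of the per-pixel computation. It assumes an interleaved 8-bit RGB image, and the function name `oilPaintPixel`, the `HOST_DEVICE` macro and the exact intensity-to-bin mapping are hypothetical choices for illustration, not necessarily what the original code does. The macro lets one function body compile for the CPU with an ordinary C++ compiler and for the GPU under nvcc.

```cpp
#include <cstdint>

// Lets the same function compile for the CPU (g++/clang) and the GPU (nvcc).
#ifdef __CUDACC__
#define HOST_DEVICE __host__ __device__
#else
#define HOST_DEVICE
#endif

// Oil-painting filter for one output pixel (x, y) of an interleaved 8-bit RGB
// image. radius is the half-width of the square window; levels is the number
// of intensity bins and must be in the range 1..256.
HOST_DEVICE void oilPaintPixel(const std::uint8_t* src, std::uint8_t* dst,
                               int width, int height, int x, int y,
                               int radius, int levels)
{
    // Four histograms of up to 256 ints each (4 kB): pixel count per bin and
    // the summed red, green and blue values of the pixels in that bin.
    int count[256] = {0};
    int sumR[256] = {0};
    int sumG[256] = {0};
    int sumB[256] = {0};

    for (int dy = -radius; dy <= radius; ++dy) {
        for (int dx = -radius; dx <= radius; ++dx) {
            int nx = x + dx;
            int ny = y + dy;
            // Skip neighbours that fall outside the image.
            if (nx < 0 || ny < 0 || nx >= width || ny >= height) continue;
            const std::uint8_t* p = src + 3 * (ny * width + nx);
            int r = p[0], g = p[1], b = p[2];
            // Map the average intensity (0..255) onto bins 0..levels-1.
            int bin = ((r + g + b) / 3) * levels / 256;
            ++count[bin];
            sumR[bin] += r;
            sumG[bin] += g;
            sumB[bin] += b;
        }
    }

    // Choose the most populated bin; it is never empty because (x, y)
    // itself was counted.
    int best = 0;
    for (int i = 1; i < levels; ++i) {
        if (count[i] > count[best]) best = i;
    }

    // Output the average colour of that bin.
    std::uint8_t* out = dst + 3 * (y * width + x);
    out[0] = static_cast<std::uint8_t>(sumR[best] / count[best]);
    out[1] = static_cast<std::uint8_t>(sumG[best] / count[best]);
    out[2] = static_cast<std::uint8_t>(sumB[best] / count[best]);
}
```

On the CPU this would be called in a loop over every (x, y); under nvcc, a `__global__` kernel could call it once per thread, computing x as `blockIdx.x * blockDim.x + threadIdx.x` (and y likewise) and skipping threads that fall outside the image.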

Local memory

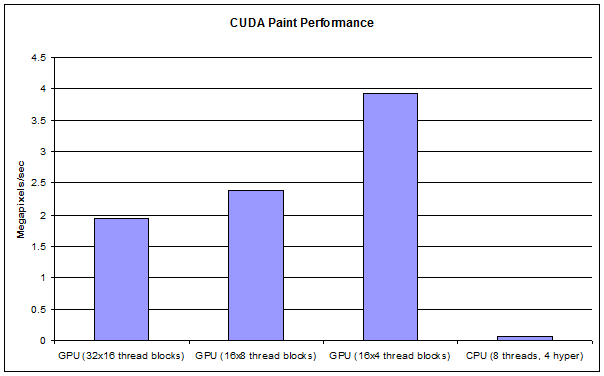

Unlike the previous tutorial, the calculation of each pixel requires a substantial amount of local memory. Each thread needs four integer histograms (a pixel count plus running red, green and blue sums for each bin), each with up to 256 elements. At 4 bytes per integer, that is 4 × 256 × 4 = 4,096 bytes, so each thread needs 4 kB of memory! While the latest CUDA architectures have a cache hierarchy, GPUs devote far less space to cache than CPUs do. If each thread block contained 32×16 = 512 threads, as in the previous tutorial, each thread block would need 2 MB of local memory, far more than the GPU's on-chip caches can hold. To get the best performance, you will need to do a little experimentation. The graphics card I used for testing is a GTX 470, and I found that a 16×4 thread configuration per block (256 kB of local memory per block) worked best: it was twice as fast as the 32×16 configuration.
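The memory arithmetic above, and the matching launch geometry, can be checked with a few compile-time helpers. This is a sketch under the assumption of 32-bit `int` (true for CUDA devices); the names `bytesPerBlock` and `blocksFor` are hypothetical.

```cpp
#include <cstddef>

// Per-thread storage for the oil-painting kernel: a pixel count plus running
// R, G and B sums, each an int array with up to 256 bins.
constexpr std::size_t kHistograms = 4;
constexpr std::size_t kMaxBins = 256;
constexpr std::size_t kBytesPerThread = kHistograms * kMaxBins * sizeof(int);

// Local memory needed by one thread block of blockX x blockY threads.
constexpr std::size_t bytesPerBlock(std::size_t blockX, std::size_t blockY) {
    return blockX * blockY * kBytesPerThread;
}

// Number of blocks needed to cover `extent` pixels with blocks `block`
// threads wide, rounded up so edge pixels are not skipped.
constexpr unsigned blocksFor(unsigned extent, unsigned block) {
    return (extent + block - 1) / block;
}

static_assert(kBytesPerThread == 4096, "assumes 32-bit int");
static_assert(bytesPerBlock(32, 16) == 2u * 1024 * 1024, "32x16 block: 2 MB");
static_assert(bytesPerBlock(16, 4) == 256u * 1024, "16x4 block: 256 kB");
```

Under nvcc, the 16×4 configuration from the text would then be launched with `dim3 block(16, 4)` and `dim3 grid(blocksFor(width, 16), blocksFor(height, 4))`, with the kernel itself checking that each thread's (x, y) lies inside the image.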

Large local memory implications

One thing to note is that because each thread needs so much memory, we rely on a good cache hierarchy for good performance. While this code may work on CUDA devices of compute capability 1.3 or earlier, it will suffer massive performance hits, because every local-memory access must go to off-chip device memory (the same DRAM that backs global memory), which has a very long latency. Devices of compute capability 2.0 or greater have a proper cache system, which lets us achieve excellent performance without changing the code.

Shared memory

The first CUDA devices, with compute capability 1.3 or earlier, had only shared memory, which had to be declared and used very carefully. For devices of compute capability 2.0 or later, however, nVidia has done an outstanding job of letting part of this on-chip memory serve as an L1 cache. Because we now have a cache, we don't have to do anything special to allocate 4 kB of memory for each thread. We simply declare the arrays as we would in any C++ program, and it just works right out of the box.
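Since the approach depends on the compute capability 2.0 cache, a host program might check the device before launching. The sketch below is an assumption, not part of the original code: the helper names are hypothetical, and asking for the larger L1 split is an optional tweak the text does not use. `cudaGetDeviceProperties`, `cudaSetDevice` and `cudaDeviceSetCacheConfig` are standard CUDA runtime calls; the last one has no effect on devices whose L1/shared split is fixed.

```cpp
#include <cstdio>

#ifdef __CUDACC__
#include <cuda_runtime.h>
#endif

// Per-thread local arrays are cached on chip only on devices of compute
// capability 2.0 or later; on 1.x devices every access goes to DRAM.
bool localMemoryIsCached(int major) {
    return major >= 2;
}

#ifdef __CUDACC__
// Hypothetical helper: warn on pre-2.0 devices, then ask for the larger L1
// split (48 kB L1 / 16 kB shared on Fermi), assuming the kernel keeps its
// histograms in local memory and uses little or no shared memory.
bool configureForLocalArrays(int device) {
    cudaDeviceProp prop;
    if (cudaGetDeviceProperties(&prop, device) != cudaSuccess) return false;
    if (!localMemoryIsCached(prop.major)) {
        std::printf("Warning: %s (compute capability %d.%d) has no L1 cache; "
                    "expect very slow local-memory access.\n",
                    prop.name, prop.major, prop.minor);
    }
    if (cudaSetDevice(device) != cudaSuccess) return false;
    // A preference only: ignored where the L1/shared split is fixed.
    return cudaDeviceSetCacheConfig(cudaFuncCachePreferL1) == cudaSuccess;
}
#endif
```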

Results

The CPU-only version of this function runs at 0.060726 megapixels per second using all 8 threads of an i7-930 processor; in other words, the test image took almost 12.95 seconds to finish. The best CUDA kernel configuration processed the image at 3.91 megapixels per second. To put this in context, that is a 64x speedup including all memory copy and kernel invocation overhead, with minimal porting effort. This speedup is the difference between waiting about 13 seconds for a single image and getting the result in about a fifth of a second. To match this performance with CPUs alone, assuming perfect scaling, you would need a cluster of 64 i7-930 processors, and you would have to rewrite your program to use MPI. We achieved it with a single, relatively cheap graphics card, and the bulk of the function was literally just copied from the CPU-only version. This shows that complex image processing operations which require substantial memory on a per-pixel basis can be ported to CUDA easily and achieve massive speedups!
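The figures above are throughputs measured end to end. One way to reproduce that kind of measurement is sketched below; how the original numbers were timed isn't shown, so the use of `std::chrono` and the helper names here are assumptions.

```cpp
#include <chrono>

// Throughput in megapixels per second for `pixels` pixels processed in
// `seconds` seconds of wall-clock time.
double megapixelsPerSecond(long long pixels, double seconds) {
    return static_cast<double>(pixels) / 1.0e6 / seconds;
}

// Wall-clock time of an arbitrary piece of work, in seconds. Wrapping the
// whole GPU path (host-to-device copy, kernel launch and the blocking
// device-to-host copy that waits for the kernel) in `work` gives an
// end-to-end figure that includes all overhead.
template <typename Work>
double timeSeconds(Work&& work) {
    const auto start = std::chrono::steady_clock::now();
    work();
    const auto stop = std::chrono::steady_clock::now();
    return std::chrono::duration<double>(stop - start).count();
}

// Speedup of one implementation over another, from their throughputs.
double speedup(double fastMpps, double slowMpps) {
    return fastMpps / slowMpps;
}
```

Plugging in the figures above, `speedup(3.91, 0.060726)` is about 64.4, which matches the quoted 64x.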

The code

The code may be accessed on the next page.